Hi everyone,

I’m trying to use a Vicon system to send position information to a quadrotor for indoor flight testing. An onboard Odroid computer receives the Vicon data and forwards it to a Pixracer using mavros. Using QGroundControl, I’ve confirmed that the Pixracer is consistently receiving the Vicon data without loss, but my x and y local position estimates consistently diverge from the values coming from the Vicon system.

Here is a link to the Flight Review upload from one test that I did: https://logs.px4.io/plot_app?log=a2655663-a519-4a10-b68a-5502681c0f84

Context: I’m using an untouched copy of stable release v1.8.2 on my drone. I’ve removed the four propellers from my quadrotor and in the test I’m holding the drone in the Vicon field and manually moving it around. From Flight Review, you can see that my x and y positions repeatedly diverge, jump to 0 and then return to reasonable values.

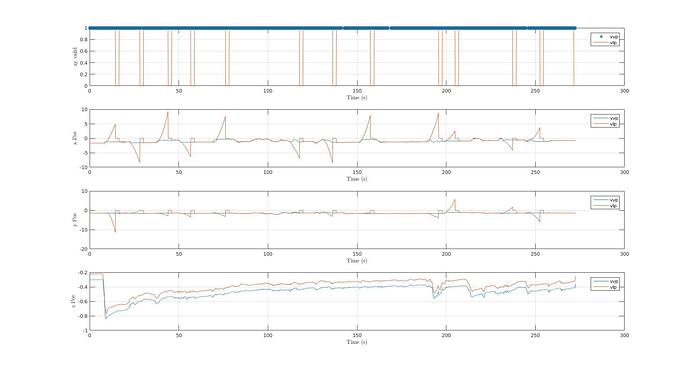

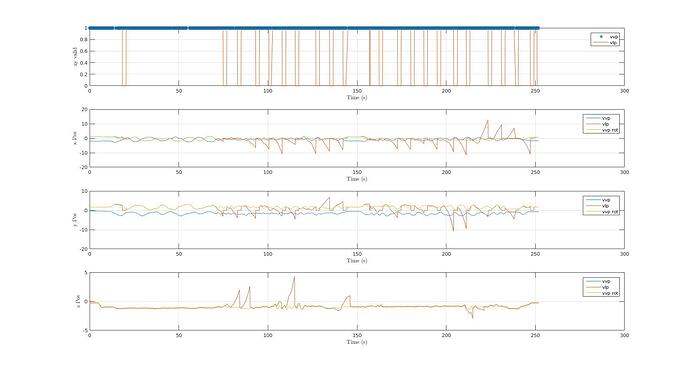

I also post-processed the flight log using MATLAB and produced this image, which I think really illustrates my problem:

In these graphs, I’m plotting data logged from the vehicle_local_position topic (vlp, orange) and from the vehicle_vision_position topic (vvp, blue). The first graph shows the .xy_valid element of each of these topics and the subsequent plots show the x, y and z position data. What really confuses me about these results is that the state estimator does not seem to be making proper use of the Vicon data to get the x and y position. Every time the local position estimate diverges, the drone still has access to Vicon data, but it seems to take several seconds before the divergence gets flagged by the xy_valid element and the estimate converges back to the Vicon value. I’ve been digging into the EKF code to try to figure out why this is happening, but I haven’t had any luck yet.
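To put a number on "several seconds", here is a minimal Python sketch (not the MATLAB script used for the plots) of one way to measure how long the local position estimate stays away from the Vicon value. It assumes the timestamps and x values of both topics have already been extracted from the log into plain lists. The function names, the 0.2 m threshold, and the sample data are all hypothetical and only there for illustration.

```python
from bisect import bisect_left


def interp(t, ts, xs):
    """Linearly interpolate the series (ts, xs) at time t, clamping at the ends."""
    if t <= ts[0]:
        return xs[0]
    if t >= ts[-1]:
        return xs[-1]
    i = bisect_left(ts, t)  # ts[i - 1] < t <= ts[i]
    t0, t1 = ts[i - 1], ts[i]
    x0, x1 = xs[i - 1], xs[i]
    return x0 + (x1 - x0) * (t - t0) / (t1 - t0)


def divergence_intervals(vlp_t, vlp_x, vvp_t, vvp_x, threshold=0.2):
    """Return (start, end) times during which |vlp - vvp| exceeds threshold.

    vlp_* is the estimator output, vvp_* is the vision (Vicon) input.
    The threshold (in metres) is an arbitrary illustrative value.
    """
    intervals = []
    start = None
    for t, x in zip(vlp_t, vlp_x):
        # Compare against the vision value resampled at the estimator timestamp.
        diverged = abs(x - interp(t, vvp_t, vvp_x)) > threshold
        if diverged and start is None:
            start = t
        elif not diverged and start is not None:
            intervals.append((start, t))
            start = None
    if start is not None:
        # Still diverged when the log ends.
        intervals.append((start, vlp_t[-1]))
    return intervals


if __name__ == "__main__":
    # Synthetic data, not taken from the flight log above.
    vvp_t, vvp_x = [0.0, 5.0], [1.0, 1.0]
    vlp_t = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
    vlp_x = [1.0, 1.0, 1.5, 2.0, 1.0, 1.0]
    for start, end in divergence_intervals(vlp_t, vlp_x, vvp_t, vvp_x):
        print(f"diverged from t={start} s to t={end} s ({end - start} s)")
```

Running the same function on the y axis, and lining the resulting intervals up against the times where xy_valid drops, would show how long the estimate drifts before it gets flagged.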

I would greatly appreciate any help with this issue!

Thanks

Sean