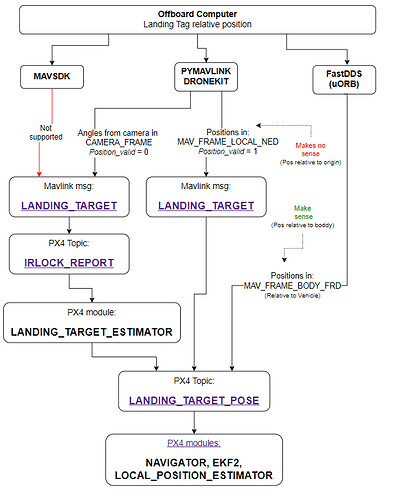

I am currently attempting MAVLink-assisted precision landing with an ArUco marker, following https://docs.px4.io/main/en/advanced_features/precland.html#offboard-positioning. I’m programming in Python using MAVSDK. However, it’s unclear to me how to provide the LANDING_TARGET message.
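For reference, here is how I read the LANDING_TARGET (#149) fields in the MAVLink common message definitions, collected into a plain Python dataclass. The class and helper names are my own (not part of MAVSDK or pymavlink), and the enum values are copied from my reading of the spec, so they may need checking against your dialect:

```python
from dataclasses import dataclass, field
from typing import List

# Enum values as I read them in the MAVLink common message set (assumption: verify for your dialect).
MAV_FRAME_LOCAL_NED = 1
LANDING_TARGET_TYPE_VISION_FIDUCIAL = 2


@dataclass
class LandingTarget:
    """Hypothetical container mirroring the LANDING_TARGET (#149) fields."""
    time_usec: int
    target_num: int = 0
    frame: int = MAV_FRAME_LOCAL_NED
    # Sensor-relative angular fields (the original, pre-extension part of the message).
    angle_x: float = 0.0
    angle_y: float = 0.0
    distance: float = 0.0
    size_x: float = 0.0
    size_y: float = 0.0
    # Extension ("v2") fields: target position in `frame`, in metres.
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    # Target orientation as [w, x, y, z]; identity means zero rotation.
    q: List[float] = field(default_factory=lambda: [1.0, 0.0, 0.0, 0.0])
    type: int = LANDING_TARGET_TYPE_VISION_FIDUCIAL
    position_valid: int = 1


def make_offboard_target(time_usec: int, north: float, east: float, down: float) -> LandingTarget:
    """Fill only the position fields that the PX4 docs say are used in the offboard case."""
    return LandingTarget(time_usec=time_usec, x=north, y=east, z=down, position_valid=1)
```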

The same page says at the bottom that PX4 only supports MAV_FRAME_LOCAL_NED as the MAV_FRAME. So the LANDING_TARGET messages have to contain the offset of the marker from the home position, in metres?

This sounds very strange to me. In that case, I would only need to measure the distance between the marker and the start position once. In fact, I wouldn’t even need a marker, not to mention that everything would come down to GPS accuracy.
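If instead the camera measurement is meant to be converted into local NED on every frame, I imagine something like the sketch below: rotate the marker offset measured by the camera into NED using the vehicle attitude, then add the vehicle’s current local position. This is my own assumption, not something from the PX4 or MAVSDK docs; it ignores the camera mounting offset and assumes the offset has already been mapped from camera axes into the body FRD (forward, right, down) frame.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def body_to_ned(offset_frd: Vec3, roll: float, pitch: float, yaw: float) -> Vec3:
    """Rotate a body-frame (forward, right, down) vector into NED using ZYX Euler angles in radians."""
    fx, fy, fz = offset_frd
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    # Rows of the body-to-NED rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll).
    n = (cy * cp) * fx + (cy * sp * sr - sy * cr) * fy + (cy * sp * cr + sy * sr) * fz
    e = (sy * cp) * fx + (sy * sp * sr + cy * cr) * fy + (sy * sp * cr - cy * sr) * fz
    d = (-sp) * fx + (cp * sr) * fy + (cp * cr) * fz
    return (n, e, d)


def marker_local_ned(vehicle_ned: Vec3, roll: float, pitch: float, yaw: float,
                     marker_frd: Vec3) -> Vec3:
    """Hypothetical helper: marker position in local NED = vehicle position + rotated camera offset."""
    dn, de, dd = body_to_ned(marker_frd, roll, pitch, yaw)
    vn, ve, vd = vehicle_ned
    return (vn + dn, ve + de, vd + dd)
```

For example, a vehicle hovering 8 m up at local (10, 5), yawed 90° to face east and level, that sees the marker 2 m ahead and 8 m below would report the marker at roughly (10, 7, 0).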

So, what am I misunderstanding here? I haven’t found any example or documentation that made MAVSDK’s use of coordinate systems clear to me.

Also, the Offboard landing article says that only x, y and z are used. Does that mean I can’t make the drone reach the correct yaw by providing a quaternion in the v2 message?
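What I had in mind is filling the v2 `q` field with the marker’s yaw, roughly as in this sketch. The helper names are my own, and the [w, x, y, z] order is taken from my reading of the MAVLink field description; whether PX4 reads this field at all is exactly what I’m unsure about.

```python
import math
from typing import List


def yaw_to_quaternion(yaw: float) -> List[float]:
    """Quaternion [w, x, y, z] for a pure rotation of `yaw` radians about the NED down axis."""
    half = yaw / 2.0
    return [math.cos(half), 0.0, 0.0, math.sin(half)]


def quaternion_to_yaw(q: List[float]) -> float:
    """Yaw in radians of a [w, x, y, z] quaternion, using the ZYX (yaw-pitch-roll) convention."""
    w, x, y, z = q
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
```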