I’ve been running some tests with vehicle_visual_odometry in simulation to see how I could use it for CV localization. In my setup, I’m getting ground truth poses from Gazebo and using MAVROS to send them to PX4. I then increased the simulated GPS noise, commanded the drone to fly to a fixed location in the local frame, and compared the local position estimate with the ground truth. If my understanding is correct, the local position estimate should closely match what I’m sending in through vehicle_visual_odometry, i.e. the ground truth.

However, I noticed a large offset between the local position estimate and the ground truth poses I’m feeding in and I can’t figure out why this is the case. Logfile here: https://logs.px4.io/plot_app?log=997a811d-9ce6-4179-ae50-035b1c380d52

Things I’ve checked:

- The vehicle_visual_odometry topic is being published to uORB and matches the ground truth

- EKF2 is using the data somehow, because if I feed in incorrect data (e.g. all 0s) I can see the estimates all getting substantially worse

- (Co)variances are set very small (0.001 for position)

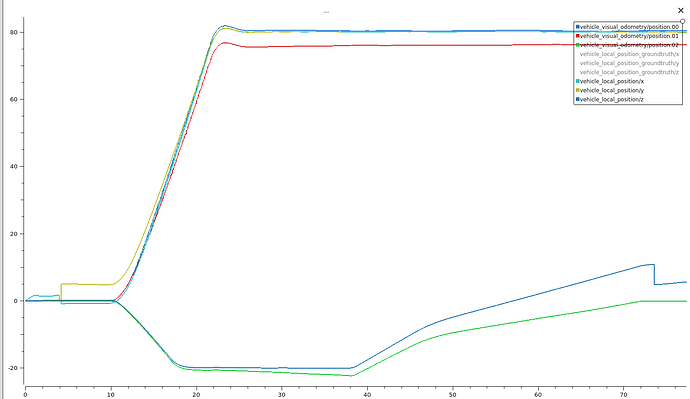

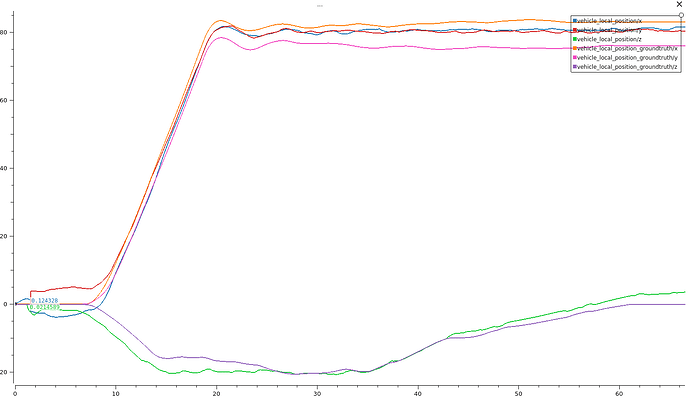

The plot below shows vehicle_visual_odometry (which matches groundtruth) against the local position estimator output. The offsets in Y and Z are clearly visible.
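To put numbers on the offsets rather than reading them off the plot, the comparison could be sketched roughly as below. This is only a minimal sketch: it assumes both topics have already been exported from the log as plain lists of timestamps and per-axis values, and the helper names `interpolate` and `axis_offset` are hypothetical, not part of PX4 or MAVROS. The sample values at the bottom are synthetic placeholders, not data from my log.

```python
from bisect import bisect_left
from math import sqrt


def interpolate(times, values, t):
    """Linearly interpolate `values` (sampled at sorted `times`) at time t."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    i = bisect_left(times, t)  # first index with times[i] >= t
    t0, t1 = times[i - 1], times[i]
    w = (t - t0) / (t1 - t0)
    return values[i - 1] + w * (values[i] - values[i - 1])


def axis_offset(ref_t, ref_v, est_t, est_v):
    """Return (mean offset, RMSE) of estimate minus reference on one axis.

    The reference is resampled at the estimator timestamps; estimator
    samples outside the reference time span are ignored.
    """
    errors = [
        v - interpolate(ref_t, ref_v, t)
        for t, v in zip(est_t, est_v)
        if ref_t[0] <= t <= ref_t[-1]
    ]
    if not errors:
        raise ValueError("no overlapping samples between the two series")
    mean = sum(errors) / len(errors)
    rmse = sqrt(sum(e * e for e in errors) / len(errors))
    return mean, rmse


if __name__ == "__main__":
    # Synthetic placeholder data (NOT taken from the log above):
    # reference = visual odometry Y, estimate = local position Y.
    vo_t = [0.0, 1.0, 2.0, 3.0]
    vo_y = [0.0, 1.0, 2.0, 3.0]
    lpos_t = [0.5, 1.5, 2.5]
    lpos_y = [1.0, 2.0, 3.0]
    mean, rmse = axis_offset(vo_t, vo_y, lpos_t, lpos_y)
    print(f"Y offset: mean={mean:.3f} m, rmse={rmse:.3f} m")
```

A mean offset close to the RMSE would point to a roughly constant bias on that axis rather than noise, which is what the Y and Z curves look like to me.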

Am I just misunderstanding how vehicle_visual_odometry is intended to work? Is there something wrong with my experiment setup?

I’ve also compared this against the ground truth vs. local estimator output for the case without ground truth feedback. In that case the estimator output is a lot less smooth, so EKF2 is definitely doing something with the feedback. In the end, however, the position error is roughly the same as with feedback.