Based on Offboard, Visual Positioning, EKF2, Control-2

In the third stage, we used visual positioning as the horizontal position source and a range sensor as the altitude source; GPS was completely disabled. We tried two different methods of passing visual positioning data to PX4:

Way 1: Broadcast TF tree.

① We modified the ‘vision_pose_estimate’ section of ‘px4_config.yaml’: we changed ‘listen’ from ‘False’ to ‘True’, set ‘frame_id’ to ‘map’, and set ‘child_frame_id’ to ‘base_link’. (‘map’ is the local ground coordinate frame; ‘base_link’ is the body coordinate frame.)

② We wrote a ROS node to broadcast the TF tree:

③ On top of the original parameter settings, we set the parameters related to visual positioning: EKF2_GPS_CTRL = 0 and EKF2_EV_CTRL = 13.
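To make the EKF2_EV_CTRL value easier to check, here is a minimal sketch that decodes the bitmask. It assumes the bit layout listed in the PX4 parameter reference (bit 0 horizontal position, bit 1 vertical position, bit 2 3D velocity, bit 3 yaw); the helper name `decode_ev_ctrl` is our own and not part of any PX4 or MAVROS API.

```python
# Assumed bit layout of EKF2_EV_CTRL, taken from the PX4 parameter reference.
EV_CTRL_BITS = {
    0: "horizontal position",
    1: "vertical position",
    2: "3D velocity",
    3: "yaw",
}


def decode_ev_ctrl(value):
    """Return the external-vision aiding sources enabled by a bitmask value."""
    return [name for bit, name in EV_CTRL_BITS.items() if value & (1 << bit)]


if __name__ == "__main__":
    # 13 = 0b1101, so bits 0, 2 and 3 are set and bit 1 is clear.
    print(decode_ev_ctrl(13))
```

Under that assumption, 13 enables vision for horizontal position, velocity, and yaw but not for vertical position, which matches our intent of taking altitude from the range sensor.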

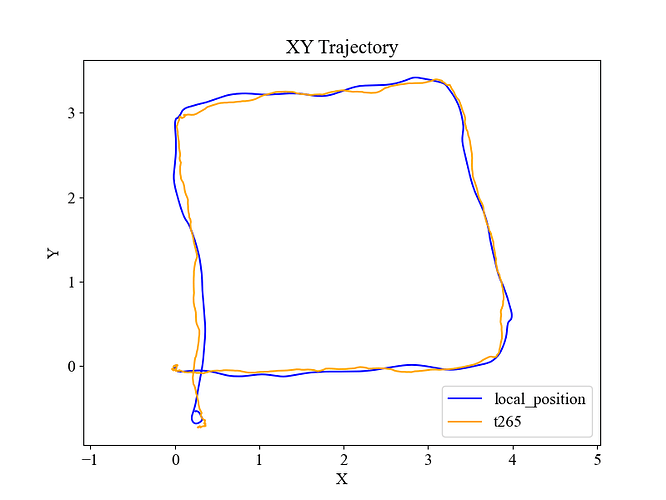

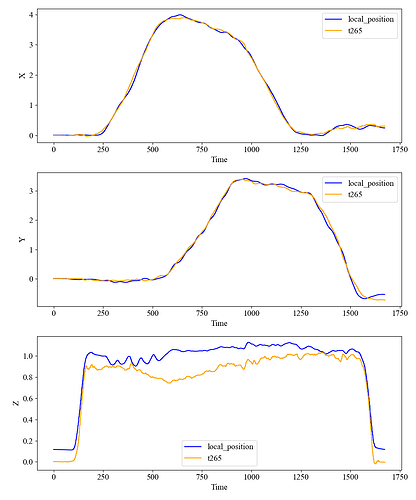

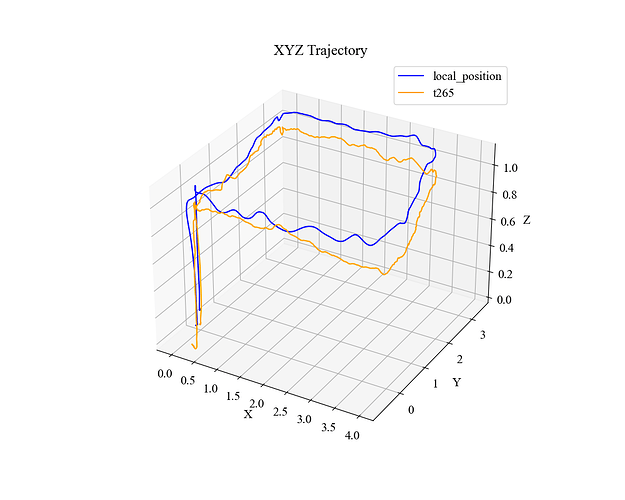

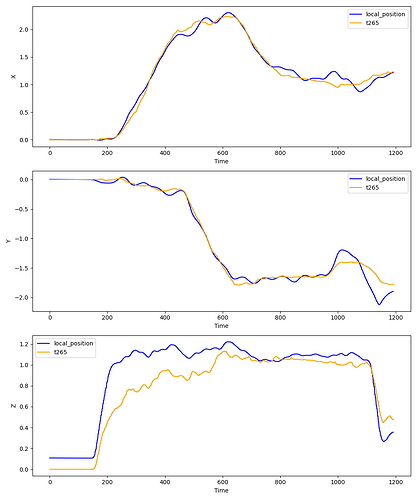

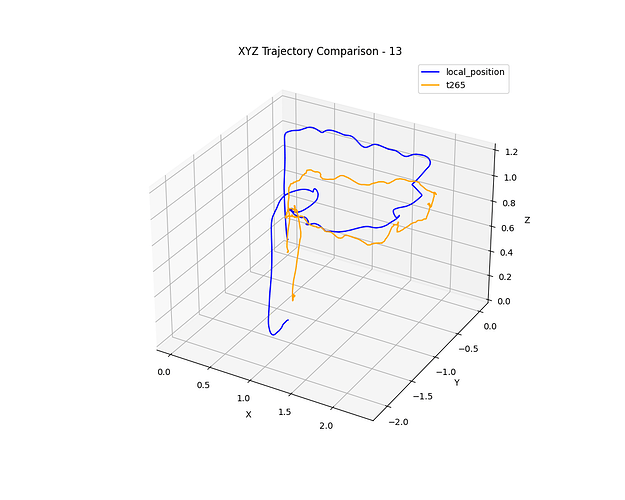

④ After powering on the UAV, we carried it by hand and walked around to simulate flight and test the visual positioning. We recorded the ‘local_position’ data published by PX4 in real time and compared it with the ‘camera/odom/sample’ data published by the camera. The position error grew as the distance from the origin increased, but the estimated trajectory followed the route actually taken, which indicated that the coordinate transformation in the TF tree was correct. Near the origin, the positioning error was small enough to be ignored. The test results are shown below:
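The comparison described in this step can be sketched as follows. This is a simplified illustration, not the script we ran: it assumes the two tracks have already been time-aligned and expressed in the same frame, and the function name and sample values are hypothetical.

```python
import math


def error_vs_distance(reference, estimate):
    """For paired (x, y) samples, return (distance_from_origin, horizontal_error) tuples.

    `reference` is the camera track and `estimate` is the PX4 local position,
    both assumed to be time-aligned and in the same frame.
    """
    if len(reference) != len(estimate):
        raise ValueError("both tracks need the same number of samples")
    rows = []
    for (rx, ry), (ex, ey) in zip(reference, estimate):
        distance = math.hypot(rx, ry)          # how far the camera says we are from the origin
        error = math.hypot(ex - rx, ey - ry)   # horizontal gap between the two sources
        rows.append((distance, error))
    return rows


if __name__ == "__main__":
    # Made-up numbers for illustration only; these are not data from our test.
    camera = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    px4 = [(0.0, 0.0), (1.0, 0.1), (2.0, 0.3)]
    for distance, error in error_vs_distance(camera, px4):
        print(f"distance {distance:.2f} m  error {error:.2f} m")
```

Plotting error against distance makes it easy to see whether the mismatch grows with range, as we observed, or appears as a constant offset or rotation, which would point to a frame problem instead.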

⑤ We tried to take off and hover the UAV with a remote controller in POSITION mode, but after takeoff the UAV could not hold position at the origin. It drifted in a random direction, slowly at first and then faster, until it went out of control. The pilot did not move the sticks after takeoff. We tried many times with similar results. The recorded log files are below:

Visual information was lost and the UAV lost control:

Altitude source set to the range sensor; the UAV lost control:

Visual positioning in use; the UAV lost control:

Way 2: Publish topics.

① We modified the ‘vision_pose_estimate’ section of ‘px4_config.yaml’: we changed ‘listen’ from ‘True’ to ‘False’.

② We wrote a ROS node that publishes positioning data to ‘/mavros/odometry/out’ and system status messages to ‘/mavros/companion_process/status’. We also changed the parameters related to the camera location in ‘bridge.launch’. We followed this document:

https://docs.px4.io/main/en/computer_vision/visual_inertial_odometry.html#suggested-setup

The code is shown below:

③ Same as step ③ of Way 1.

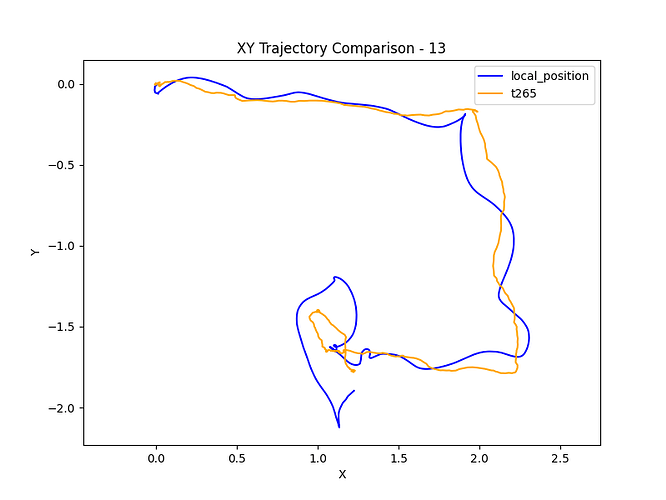

④ Same as step ④ of Way 1. The test results are shown below:

⑤ We tried to take off and hover the UAV with a remote controller in POSITION mode, but after takeoff the UAV could not hold position at the origin. It drifted in a random direction, slowly at first and then faster, until it went out of control. The pilot did not move the sticks after takeoff. We tried many times with similar results. The recorded .ulg files are below:

Visual positioning in use; the UAV lost control:

We have several open questions about the third stage:

Q9. Are both of the methods above correct? If not, is the method itself wrong, or are we missing steps?

Q10. In Way 1, as in Q5, we assumed that both ‘map’ and ‘base_link’ are FLU. According to the official documentation (Using Vision or Motion Capture Systems for Position Estimation | PX4 Guide (v1.15)), ‘odom’ is FLU and ‘map’ is ENU (is that correct?). Could setting frame_id to ‘map’ be the reason the flight failed? (Normally we would use ‘odom’, but at first we thought ‘map’ was ENU and set it as the frame_id.)

Q11. In Way 2, after launching MAVROS, the TF tree shows transforms from ‘odom’ to ‘odom_frd’ and from ‘base_link’ to ‘base_link_frd’. If we want to use another frame (such as ‘camero_odom’) as the world frame, is it feasible to broadcast a TF transform from ‘camero_odom’ to ‘odom_frd’? Is there any other part of px4_config.yaml that should be modified (for example, the ‘# odom’ section)?

Q12. Are the parameters we set correct for visual positioning? Are there parameters that we should have set but did not?

Q13. Why is there a primary altitude reference source, EKF2_HGT_REF, but no equivalent for the horizontal direction?

Q14. What is the underlying logic behind EKF2’s fusion positioning? How does it determine whether a source is trustworthy during fusion? Is there a priority when using different positioning sources?

Q15. Is there a better protective measure when position information is lost (besides switching to STABILIZE/ALTITUDE)?
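For context on Q10 and Q11, the frame conventions we have in mind can be written down as simple axis mappings. This is a minimal sketch of the standard ENU/NED and FLU/FRD relationships as we understand them; the function names are ours and are not MAVROS API, and we have not confirmed exactly where MAVROS applies each conversion.

```python
def enu_to_ned(x, y, z):
    """World frame: ENU (east, north, up) -> NED (north, east, down)."""
    return (y, x, -z)


def flu_to_frd(x, y, z):
    """Body frame: FLU (forward, left, up) -> FRD (forward, right, down)."""
    return (x, -y, -z)


if __name__ == "__main__":
    # 1 m east, 2 m north, 3 m up becomes 2 m north, 1 m east, -3 m down.
    print(enu_to_ned(1.0, 2.0, 3.0))
    # 1 m forward, 2 m left, 3 m up becomes 1 m forward, -2 m right, -3 m down.
    print(flu_to_frd(1.0, 2.0, 3.0))
```

Both mappings are their own inverse, so applying one twice returns the original vector. If a frame we labeled ‘map’ is actually FLU rather than ENU, the position fed to PX4 would be rotated by the initial heading, which is part of what we are asking about.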

Unfortunately, the UAV was severely damaged during the experiment, so we cannot run more tests in the near term and cannot provide additional data for now.

Beyond the questions above, if you spot other errors in the ULG files or have suggestions, we would be very grateful!

Additional information:

1. The PX4 firmware version is 1.15.4, the MAVROS version is 1.20.0, and the autopilot board is a Holybro Pixhawk 4.

2. The onboard computer is a Jetson Orin NX running Ubuntu 20.04.

3. The camera is a T265, and the visual positioning algorithm is the camera’s built-in VIO algorithm.

4. All experiments were conducted outdoors.